For her PhD in Cinema and Media Arts as a Vanier Scholar at York University's School of the Arts, Media, Performance and Design, Alison recently defended a research-creation doctoral project titled "The Shadowpox Storyworld as Citizen Science Fiction: Building Co-Immunity through Participatory Mixed-Reality Storytelling." She is currently a postdoctoral research fellow at the Global Strategy Lab in the Dahdaleh Institute for Global Health Research.

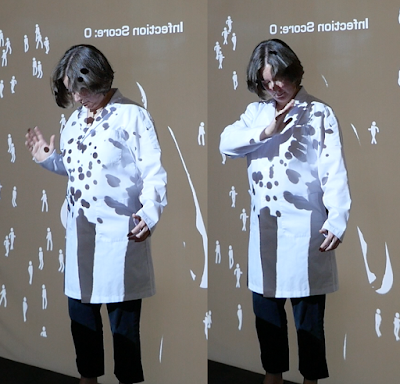

Shadowpox formed part of the multi-year interdisciplinary initiative Immune Nations. In a review of its exhibition during the World Health Assembly in Geneva, Switzerland, The Lancet called Shadowpox "undoubtedly one of the most powerful and playful ways to illustrate both the individual and population-level implications of community immunity." (Please see shadowpox.org for more.)

Alison has designed and taught two courses in York’s Department of Cinema and Media Arts. Digital Culture: Science and Fiction (Winter 2021) was a special edition of CMA 3841 based on the “courseplay” concept developed in her research, in which students created characters volunteering for a Phase I vaccine trial at the height of the Shadowpox pandemic. Through video roleplay, as well as through reading and writing, students explored online misinformation and science literacy. The following year, she designed and taught two terms of CMA 1123 Writing for Games and Interactive Media (Winter and Fall 2022), a new course required of all first-year Media Arts students.

Alison holds a BA from Wellesley College in American studies (political and legal thought) with a minor in studio art; an MA in interactive multimedia from the Royal College of Art; and an MFA in theatre directing from York University, where her thesis production of A Midsummer Night's Dream used motion-capture technology to weave real-time 3D computer animation and digital effects into live performance.

She started her career as an intern at Marvel Comics, worked as a writer on 115 episodes of Global TV's improvised "instant drama" Train 48 (where she initiated one of the earliest transmedia blogs in a TV series), and produced one of the first alternate reality games for Douglas Adams's Starship Titanic (whose community-created storyworld has continued to evolve for two decades, as chronicled in The Economist: "Emergent systems: The forum at the end of the universe"). As a story editor for Sarrazin Couture Entertainment, she developed drama series for CBC, CTV and ABC, and as a research associate at York University she helped launch a new collaborative e-learning system.

Theatre work includes assistant directing with Katie Mitchell at the Royal Court Theatre, Stephen Unwin at the English Touring Theatre, and Carey Perloff at the American Conservatory Theater and the Stratford Shakespeare Festival (where she won the Elliott Hayes Award). Directing includes the UK premieres of James Reaney's The Donnellys at the Old Red Lion Theatre and Normand Chaurette's The Queens at the Royal Shakespeare Company Fringe Festival in Stratford-upon-Avon. Alison was director and co-writer with Pascal Langdale on two interactive live-animated sci-fi theatre projects: Faster than Night (Harbourfront Centre, Toronto) and The Augmentalist (Augmented World Expo, Silicon Valley).

Presentations include:

• "Embodying the Uncanny" at the Microscopic Life in 20th- and 21st-Century Performance conference, Fondation Maison des Sciences de l’Homme, Paris.

• "Shadowcasting from Manitoulin to Masiphumelele" at the Film and Media Studies Association of Canada conference, Congress of the Humanities and Social Sciences, York University.

• "Shadowpox: Citizen Science Fiction" as part of the exhibition "Life, A Sensorium," International Symposium of Electronic Art, Montreal.

• "Building Co-Immunity: Participatory Science Fiction to Inoculate the Civic Imagination" at the Humanities, Arts, Science, and Technology Alliance and Collaboratory conference, University of British Columbia.

• "Shadowpox: Participatory Science Fiction Storytelling to Boost Community Immunity" at the Desmond Tutu HIV Foundation Research Meeting, Institute of Infectious Disease & Molecular Medicine, University of Cape Town, South Africa.

• "VFX, Infection, and Affect in a Co-Created Superhero Storyworld" at the conference Emergency and Emergence, School of Cinematic Arts, University of Southern California.

• "Imagination as In-Oculation – Shadowpox: The Antibody Politic" at Ideas Digital Forum, McLaughlin Gallery, Oshawa, Ontario.

• "Imagination, Inoculation and the Politics of Co-immunity" at Cultures of Participation – Arts, Digital Media and Politics, Aarhus University, Aarhus, Denmark.

• "Sci-Fi Insights on Co-Immunity" at The Art and Science of Immunization, Jackman Humanities Institute, University of Toronto.

• "Perils and Promises: Arts, Health, and Public Participation" at Creating Space: Health Humanities and Social Accountability in Action, Montreal, Quebec.

• "The Technologized Playwright" at the Playwrights Guild of Canada Conference, Montreal, Quebec.

Alison has been a research associate with Caitlin Fisher's Immersive Storytelling Lab, a member of the University of Toronto's Jackman Humanities Institute Working Group The Art and Science of Immunization, a member of the executive committee for York University's Sensorium: Centre for Digital Arts and Technology, and a HASTAC Scholar with the Humanities, Arts, Science, and Technology Alliance and Collaboratory.

Her blog is an erratically updated collection of thoughts and pixels, sometimes even mentioning her dissertation research, but just as often not.
